½ арьжс ы ь ср

advertisement

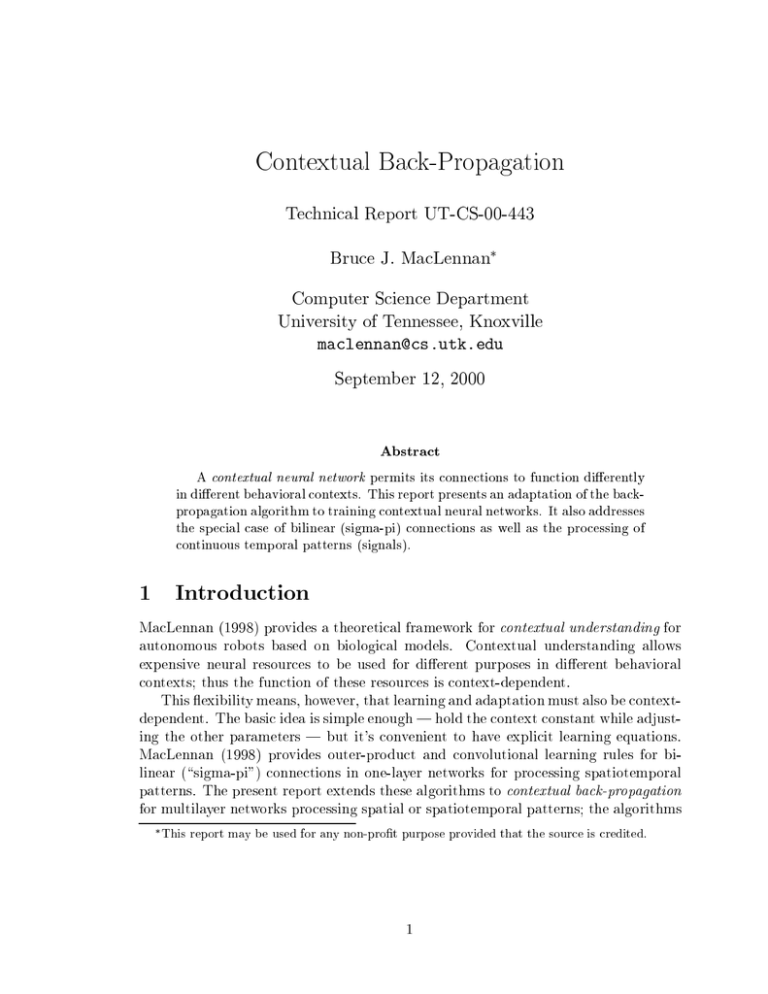

Contextual Bak-Propagation

Tehnial Report UT-CS-00-443

Brue J. MaLennan

Computer Siene Department

University of Tennessee, Knoxville

malennans.utk.edu

September 12, 2000

Abstrat

A ontextual neural network permits its onnetions to funtion dierently

in dierent behavioral ontexts. This report presents an adaptation of the bakpropagation algorithm to training ontextual neural networks. It also addresses

the speial ase of bilinear (sigma-pi) onnetions as well as the proessing of

ontinuous temporal patterns (signals).

1 Introdution

MaLennan (1998) provides a theoretial framework for ontextual understanding for

autonomous robots based on biologial models. Contextual understanding allows

expensive neural resoures to be used for dierent purposes in dierent behavioral

ontexts; thus the funtion of these resoures is ontext-dependent.

This exibility means, however, that learning and adaptation must also be ontextdependent. The basi idea is simple enough | hold the ontext onstant while adjusting the other parameters | but it's onvenient to have expliit learning equations.

MaLennan (1998) provides outer-produt and onvolutional learning rules for bilinear (\sigma-pi") onnetions in one-layer networks for proessing spatiotemporal

patterns. The present report extends these algorithms to ontextual bak-propagation

for multilayer networks proessing spatial or spatiotemporal patterns; the algorithms

This

report may be used for any non-prot purpose provided that the soure is redited.

1

are derived for general (dierentiable) ontext dependenies and for the spei (ommon) ase of bilinear dependenies.

I have tried to strike a balane in generality. On the one hand, this report goes

beyond the simple seond-order dependenes disussed in MaLennan (1998). On the

other, although the derivation is straight-forward and ould be done in a very general

framework, that generality seems superuous at this point, and so it is limited to

bak-propagation.

2 Denitions

The ontext odes are drawn from some spae, typially a vetor spae, but this

restrition is not neessary.

The eetive weight W of the onnetion to unit i from unit j is determined by

the ontext and a vetor of parameters Q assoiated with this onnetion. The

dependene is given by:

def

W = C (Q ; );

(1)

for some dierentiable funtion C on parameter vetors and ontexts. In a simple

partiular ase onsidered below (setion 3.2), C (Q ; ) = QT .

We will be dealing with an N -layer feed-forward network in whih the l-th layer

has L units (\neurons"). We use x to represent the ativity of the i-th unit of the

l-th layer, i = 1; : : : ; L . We often write the ativities of a layer as a vetor, x . The

output y of the net is the ativity of its last layer, y def

= x , and the input x to the

def

0

net determines the ativity of its \zeroth layer," x = x.

The ativity of a unit is the result of an ativation funtion applied to a linear

ombination of the ativities of the units in the preeding layer. The oeÆients of

the linear ombination are the eetive weights. Thus the linear ombination for unit

i of layer l is given by

X1

def

W x 1:

(2)

s =

ij

ij

ij

ij

ij

ij

l

l

i

l

l

N

Ll

l

l

l

i

ij

j

j

=1

That is, s = W x 1. The ativities are then given by

def

x = (s ); l = 1; : : : ; N;

whih we may abbreviate x = (s ). The eetive weights are given by Eq. 1.

We may then write the network as a funtion of the parameters and ontext,

y = N (Q; )(x), and our goal is to hoose the parameters Q to minimize an error

measure.

For training we have a set of T triples (p ; ; t ), where p is an input pattern, is a ontext, and t is a target pattern. The goal is to train the net so that pattern

p maps to target t in ontext , whih we may abbreviate

p 7! t ; q = 1; : : : ; T:

l

l

l

l

l

i

i

l

l

q

q

q

q

q

q

q

q

2

q

q

q

q

In eet, the ontext is additional input to the network, so we are attempting to map

(p ; ) 7! t . Contextual bak-propagation, however, is not simply onventional

bak-propagation on the extended inputs (p ; ), sine we must allow interations

between the omponents of p and .

Thus our goal is to nd Q so that t is as nearly equal to N (Q; )(p ) as possible.

Therefore we dene a least-squares error funtion:

q

q

q

q

q

q

q

q

E (Q) def

=

X

T

q

=1

kt

q

q

y k2 = X kt N (Q; )(p )k2:

T

q

q

q

q

q

q

=1

3 Contextual Bak-Propagation

The basi equation of gradient desent is Q_ = 21 rE (Q). Therefore we begin by

omputing the gradient of the error funtion, so far as we are able while remaining

independent of the speis of the C funtion:

X

rE = r kt y k2

X

= rkt y k2

X

= 2(t y )T d(t dQ y )

X

= 2 (t y )T ddyQ

X

= 2 (t y )T ddQ N (Q; )(p ):

Hene,

Q_ = X(t y )T ddQ N (Q; )(p ):

For online learning, omit the summation. Dene the hange resulting from the q-th

pattern:

def= (t y )T ddyQ :

This is a matrix of derivatives,

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

q

y

= (t y )T Q

l

q

q

q

ijk

l

ijk

where Q is the k-th (salar) omponent of Q .

l

l

ijk

ij

3

;

q

3.1

General Form

Sine

y

(t y )T Q

= (t y )T sy

it will be onvenient to name the quantity:

def

Æ = (t

y )T sy :

q

q

q

q

q

sli

l

i

Qlijk

q

l

ijk

;

(3)

q

l

q

q

i

l

i

Thus,

s

= Æ Q

:

The partials with respet to the parameters are omputed:

(4)

l

q

l

l

ijk

i

i

l

ijk

=

sli

Qlijk

=

X

Qlijk

j0

X

Q

l

ijk

j

0

1

Wijl 0 xlj 0

C

(Q

l

ij

)

0 ; xjl 0 1

(Q ; )x

Q

(Q ; ) x 1:

= CQ

=

l

ijk

C

l

l

ij

j

1

l

Hene we may write,

=

Q

sli

C

ij

l

l

ijk

j

(Q ; ) x

l

1:

Q

Therefore, the parameter update rule for arbitrary weights is:

(5)

= Æ x 1 C(QQ ; ) ;

whih we may abbreviate = [Æ (x 1)T℄^ [C (Q ; )= Q ℄, where ^ represents

omponent-wise multipliation [(u^ v) def

= u v ℄.

l

ij

ij

l

l

ij

j

l

q

q

l

l

l

ij

ij

i

j

l

ij

l

l

l

l

l

It remains to ompute the delta values; we begin with the output layer l = N .

Sine the output units are independent, y =s = 0 for j 6= i, we have

y

Æ = (t

y )T s

= (t y ) ddsy

The derivative is simply,

dy = dx = d ( s ) = 0 ( s ) :

ds ds

ds

n

n

n

q

N

j

i

q

q

N

q

q

i

N

i

q

N

i

i

N

i

q

q

i

i

i

N

i

N

i

N

i

N

i

4

N

i

Thus the delta values for the output layer are:

Æ = (t

y ) 0 (s );

(6)

whih we may abbreviate Æ = (t y )^ 0 (s ).

The omputation for the hidden layers (0 1 < N ) is very similar, but makes

use of the delta values for the subsequent layers.

N

q

q

N

i

i

i

i

N

q

q

N

= (t y )T sy

Æil

q

q

q

l

i

= (t y

q

=

X

=

X +1

Æ

m

q

l

y

q

slm+1

+1 s

=1 s

+1

)T s +1 ss

s +1

y

m

(t y

q

+1

X

Ll

)T

q

l

i

l

m

l

q

m

l

i

l

m

l

:

m

m

sli

m

The latter partials are omputed as follows:

slm+1

s

=

l

i

X

s

0

l

i

X

=

i

0

l+1 l

Wmi

0 xi0

i

l+1

Wmi

0

xli0

sli

dx

ds

+1

W

0 (s ):

Hene the delta values for the hidden layers are omputed by:

X +1 +1

Æ = 0 (s )

Æ W

;

=

=

l+1

Wmi

l

i

l

i

l

l

mi

i

l

l

l

i

i

m

l

mi

m

(7)

whih we may abbreviate Æ = 0(s )^ [(W +1)TÆ +1℄. Combining all of the preeding

(Eqs. 6, 7, 5), we get the following equations for ontextual bak-propagation

with arbitrary weights (showing here the updates for a single pattern q):

Æ

= 0 (s )(t y );

(8)

+1

X

Æ +1 W +1

(for 0 l < N );

(9)

Æ = 0 (s )

=1

= Æ x 1 C (Q ; ) :

(10)

l

l

l

l

N

N

q

q

i

i

i

i

Ll

l

l

l

i

i

m

l

mi

m

q

l

l

l

ij

i

j

l

ij

Q

l

ij

5

3.2

Bilinear Connetions

Next we onsider the speial ase in whih the ontext dependent weights are simply

bilinear interations between unit ativities and omponents of a ontext vetor,

def

C (Q ; ) = QT :

In this ase the partial derivative is simply,

X

C (Q ; )

= Q 0 0 = :

ij

ij

l

ij

l

Q

Q

l

ijk

l

ijk

k

ijk

0

k

k

Hene, the parameter update rule for bilinear weights is,

= Æ x 1 ;

(11)

whih we may abbreviate = Æ ^ x 1 ^ , where \^" represents outer produt:

(u ^ v ^ w) def

= uv w .

q

q

ijk

i

j

l

l

l

ijk

i

j

l

l

k

l

k

4 Spatiotemporal Patterns

Next, the preeding results will be extended to proessing spatiotemporal patterns,

in partiular, ontinuously varying vetor signals. Thus, the outputs and targets will

be vetor signals, y(t), t(t), as will the unit ativities, x (t), and assoiated quantities

suh as s (t). The parameters Q will not be time-varying, exept insofar as they are

modied by learning (i.e., they vary on the slow time-sale of learning as opposed to

the fast time-sale of the signals).

The simplest way to handle time-varying inputs is to make them disrete: y(t1 ),

y(t2), ..., y(t ) et.; then the time samples simply inrease the dimension of all the

vetors, and the preeding methods may be used. Instead, in this setion we will take

a signal-proessing approah in whih ontinuously-varying signals are proessed in

real time.

To begin, the error measure must integrate the dierene between the output and

target signals over time:

X

XZ 0

2:

k

t

(

t) y (t)k2 dt =

k

t

y

k

E (Q) def

=

1

l

l

i

n

q

q

q

q

The gradient is then easy to ompute:

XZ 0

rE =

rkt (t) y (t)k2 dt

1

q

q

XZ 0

q

q

q

[t (t) y (t)℄T[ y (t)= Q℄dt

= 2

1

X

= 2 h(t y )T; y = Qi:

q

q

q

q

q

q

q

6

q

Therefore, we an derive the hange in a parameter :

q

q

*

ijk

ijk

(t y

q

= (t y

q

= (t y

q

def

=

l

q

*

l

q

y

+

q

Qlijk

)T; y

q

sli

sli Qlijk

*

q

)T;

)T y;

q

sli

+

+

:

sli Qlijk

The delta values are therefore time-varying:

def

Æ (t) = [t (t) y (t)℄T y (t)=s (t):

Thus,

= hÆ ; s =Q i:

The onnetion W to unit i from unit j will be modeled as a linear system, whih

an be haraterized by its impulse response H ,

W x (t) = H (t) x (t);

where \

" represents (temporal) onvolution. The impulse response is dependent on

the parameters and ontext, H = C (Q ; ). Thus, multipliation in the stati ase

(Eq. 2) is replaed by onvolution in the dynami ase:

def X H (t) x 1 (t):

s ( t) =

l

q

q

q

l

i

i

q

l

l

l

l

ijk

i

i

ijk

l

ij

l

ij

l

l

ij

j

j

ij

l

l

ij

ij

l

l

l

i

ij

j

j

The derivative of s (t) with respet to the parameters is then given by:

s (t)

= H (t) x 1 (t)

l

i

l

i

Qlijk

Qlijk

=

Q

l

l

ij

j

Z +1

1

l

ijk

Z +1 H

()

l

Hij

u xjl

1 (t

(u) x 1(t

1 Q

(t) x 1 (t):

= H

Q

=

)du

l

ij

u

l

j

l

ijk

)du

u

l

ij

l

j

l

ijk

Therefore the spatiotemporal parameter update rule for arbitrary linear systems is given by

+

*

q

=

l

ijk

Æi ;

l

l

Hij

Q

7

l

ijk

x

l

j

1

:

(12)

Notie that the omputation involves a temporal onvolution (i.e., proessing by linear

system with impulse response H (t)=Q ).

To see how this might be aomplished, we onsider a speial ase analogous to the

bilinear weights onsidered in Se. 3.2. Here we take the impulse response H (t) to

be a linear superposition of omponent funtions h (t), whih ould be the impulse

responses of individual branhes of a dendriti tree. Let v (t) be the output of one

of these omponent lters:

def

v (t) = h (t) x 1 (t):

The oeÆients of the omponents of this superposition depend on the parameters

and ontext vetor. Thus,

X

H ( t) =

C h (t);

where

X

def

C

= TQ = Q :

Therefore,

X

H (t)

=

Q

h (t) = h (t):

Q

Q

Thus, the hange in the input to the ativation funtion is given by

s

=

h (t) x 1 (t) = v (t):

Q

l

l

ij

ijk

l

ij

l

ijk

l

ijk

l

l

l

ijk

ijk

j

l

l

l

ij

ijk

ijk

k

l

l

ijk

ijk

l

m

ijkm

m

l

ij

l

ijkm

m

l

ijkm k;m

l

l

ijkm

ijk

l

m

ijk

l

i

m

l

ijkm

l

l

ijk

j

l

m

ijk

The parameter update rule for a superposition of lters is then

= hÆ ; v i :

(13)

Notie that this requires v (t), the output from the omponent lters, to be saved,

so that an inner produt an be formed with Æ (t).

The delta values are omputed as before (Eqs. 8, 9), exept that all the quantities

are time-varying. Nevertheless, it may be helpful to write out the derivation for

hidden layer deltas (keeping in mind that the W +1 are linear operators):

X

s +1 (t)

=

W +10 x 0 (t)

s (t)

s (t) 0

= W +1x (t)=s (t)

= W +10[s (t)℄:

Thus we get the following delta values for spatiotemporal signals:

Æ (t) = 0 [s (t)℄[t (t) y (t)℄;

(14)

+1

X

Æ +1 (t)W +1 0 [s (t)℄

(for 0 l < N ):

(15)

Æ (t) =

q

l

l

l

ijkm

i

ijk

m

l

ijk

l

i

l

mi

l

m

i

l

l

i

N

N

q

q

i

i

i

i

Ll

l

m

l

l

mi

i

m

8

i

l

mi

i

l

i

l

l

i

mi

l

i

mi

=1

l

l

l

i

5 Referene

1. MaLennan, B. J. (1998). Mixing memory and desire: Want and will in neural

modeling. In: Karl H. Pribram (Ed.), Brain and values: Is a biologial siene

of values possible, Mahwah: Lawrene Erlbaum, 1998, pp. 31{42. (orreted

printing).

9