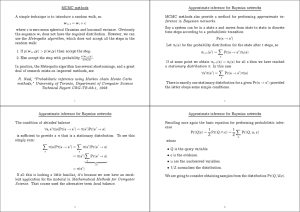

© AML Creator: Yaser Abu-Mostafa - LFD Lecture 18

Slide 5/23: Outline

• The map of machine learning
• Bayesian learning
• Aggregation methods
• Acknowledgments

Slide 6/23: Probabilistic approach

Extend the probabilistic role to all components:

• $P(\mathcal{D} \mid h = f)$ decides which $h$ (likelihood)
• How about $P(h = f \mid \mathcal{D})$?

[Figure: the learning diagram. Unknown target distribution $P(y \mid \mathbf{x})$ (target function $f: \mathcal{X} \to \mathcal{Y}$ plus noise); unknown input distribution $P(\mathbf{x})$ generating $\mathbf{x}_1, \dots, \mathbf{x}_N$; data set $\mathcal{D} = (\mathbf{x}_1, y_1), \dots, (\mathbf{x}_N, y_N)$; hypothesis set $\mathcal{H}$; learning algorithm $\mathcal{A}$; final hypothesis $g: \mathcal{X} \to \mathcal{Y}$ with $g(\mathbf{x}) \approx f(\mathbf{x})$.]

Slide 7/23: The prior

$P(h = f \mid \mathcal{D})$ requires an additional probability distribution:

$$P(h = f \mid \mathcal{D}) = \frac{P(\mathcal{D} \mid h = f)\, P(h = f)}{P(\mathcal{D})} \;\propto\; P(\mathcal{D} \mid h = f)\, P(h = f)$$

• $P(h = f)$ is the prior
• $P(h = f \mid \mathcal{D})$ is the posterior

Given the prior, we have the full distribution.

Slide 8/23: Example of a prior

Consider a perceptron: $h$ is determined by $\mathbf{w} = w_0, w_1, \cdots, w_d$.

A possible prior on $\mathbf{w}$: each $w_i$ is independent, uniform over $[-1, 1]$.

This determines the prior over $h$: $P(h = f)$.

Given $\mathcal{D}$, we can compute $P(\mathcal{D} \mid h = f)$.

Putting them together, we get

$$P(h = f \mid \mathcal{D}) \;\propto\; P(h = f)\, P(\mathcal{D} \mid h = f)$$

Slide 9/23: A prior is an assumption

Even the most neutral prior:

$x$ is unknown $\longrightarrow$ $x$ is random

[Figure: $P(x)$ uniform over $[-1, 1]$.]

The true equivalent would be:

$x$ is unknown $\longrightarrow$ $x$ is random

[Figure: delta function $\delta(x - a)$ at a point $a$ in $[-1, 1]$.]

Slide 10/23: If we knew the prior

... we could compute $P(h = f \mid \mathcal{D})$ for every $h \in \mathcal{H}$

$\Longrightarrow$

• we can find the most probable $h$ given the data
• we can derive $\mathbb{E}(h(\mathbf{x}))$ for every $\mathbf{x}$
• we can derive the error bar for every $\mathbf{x}$
• we can derive everything in a principled way

Slide 11/23: When is Bayesian learning justified?

1. The prior is valid: trumps all other methods
2. The prior is irrelevant: just a computational catalyst
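To make the perceptron example concrete (each $w_i$ independent, uniform over $[-1, 1]$), here is a minimal Python sketch of how the posterior, the most probable $h$, $\mathbb{E}(h(\mathbf{x}))$ and an error bar could be approximated. Several pieces are assumptions of this sketch, not part of the lecture: the posterior is approximated by sampling weight vectors from the prior and weighting them by the likelihood, the likelihood uses a hypothetical label-flip noise model with probability `flip_prob`, and all function names and the toy data set are made up for illustration.

```python
import math
import random


def perceptron(w, x):
    """h(x) = sign(w0 + w1*x1 + ... + wd*xd), with sign(0) taken as +1."""
    s = w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))
    return 1 if s >= 0 else -1


def sample_prior(d, rng):
    """Draw w = (w0, ..., wd), each w_i independent and uniform over [-1, 1]."""
    return [rng.uniform(-1.0, 1.0) for _ in range(d + 1)]


def likelihood(w, data, flip_prob):
    """P(D | h = f) under the ASSUMED noise model: each label is flipped
    independently with probability flip_prob."""
    p = 1.0
    for x, y in data:
        p *= (1.0 - flip_prob) if perceptron(w, x) == y else flip_prob
    return p


def posterior_samples(data, d, n_samples=5000, flip_prob=0.1, seed=0):
    """Return prior samples and their normalized posterior weights."""
    rng = random.Random(seed)
    ws = [sample_prior(d, rng) for _ in range(n_samples)]
    # The samples come from the prior P(h = f), so weighting each one by
    # P(D | h = f) gives weights proportional to the posterior.
    raw = [likelihood(w, data, flip_prob) for w in ws]
    total = sum(raw)
    return ws, [r / total for r in raw]


def predict(ws, weights, x):
    """Posterior mean E[h(x)] and its standard deviation (an error bar)."""
    mean = sum(p * perceptron(w, x) for w, p in zip(ws, weights))
    # h(x) is +1 or -1, so E[h(x)^2] = 1 and the variance is 1 - mean^2.
    return mean, math.sqrt(max(0.0, 1.0 - mean * mean))


def most_probable(ws, weights):
    """The sampled hypothesis with the largest posterior weight."""
    best = max(range(len(ws)), key=weights.__getitem__)
    return ws[best]


# Toy data set (made up): points with negative x1 are labeled -1,
# points with positive x1 are labeled +1.
data = [((-0.8, 0.1), -1), ((-0.5, -0.6), -1),
        ((0.6, 0.2), 1), ((0.9, -0.4), 1)]

ws, weights = posterior_samples(data, d=2)
mean, err = predict(ws, weights, (0.7, 0.0))
w_map = most_probable(ws, weights)
print("E[h(x)] =", mean, "error bar =", err)
print("most probable sampled w =", w_map)
```

Changing the prior in `sample_prior` changes every quantity derived from it, which is the sense in which the prior is an assumption rather than a neutral choice.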